I’ve recently been reading a book called Transformer, BERT, and GPT: Including ChatGPT and Prompt Engineering by Oswald Campesato (2024).

The book is divided into 10 chapters. Here is a summary of the fourth chapter (Transformer Architecture in Greater Depth).

Input tokens are converted into word embeddings (a mapping from words to real-valued vectors), and a positional encoding vector is then added to each embedding. The next step is the attention mechanism, a crucial part of the transformer architecture. RNNs/LSTMs expose only the final hidden state, whereas attention mechanisms can access all of the encoder's hidden states (this was mentioned in Chapter 3).

Typically, there are six or twelve encoder components, each with two sublayers. The output of the first sublayer becomes the input to the second. The decoder also has six components, each with three sub-elements (one of which receives the output from the encoder).

The encoder component of the transformer is a vertical stack of encoder blocks. Each encoder processes its input and generates encoded output that is passed upward to the next component. The final (top-most) encoder in the stack contains the representation of the initial input sentence. This result is passed to every component of the decoder block.

Machine learning models cannot process words directly, so words must be converted into word embeddings (word2vec is an example of an embedding learned with a single hidden layer). The first step is token encoding, which uses one-hot vectors (essentially dummy-coded categorical variables, except that all k categories are used instead of k – 1). The second step projects the one-hot vectors into a lower-dimensional space. The encoder then generates a hidden or “masked” state for the input tokens, and another layer predicts the correct value for the masked token. If the objective is pre-training, then the initial encoding layers are processed by subsequent layers to make predictions. Unlike word2vec, attention mechanisms can generate different embeddings for the same word when it appears in different sentences.
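The two steps above (one-hot encoding, then projection to a lower-dimensional space) can be sketched in a few lines of plain Python. This is only a toy illustration: the vocabulary, the embedding size and the helper names `one_hot` and `project` are my own assumptions, and the weights are random rather than learned.

```python
import random

def one_hot(index, vocab_size):
    """Return a one-hot list with 1.0 at `index` and 0.0 elsewhere."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec

def project(one_hot_vec, embedding_matrix):
    """Multiply a one-hot row vector by a (vocab_size x d_model) matrix."""
    d_model = len(embedding_matrix[0])
    return [
        sum(one_hot_vec[i] * embedding_matrix[i][j] for i in range(len(one_hot_vec)))
        for j in range(d_model)
    ]

# Hypothetical toy vocabulary and embedding size (illustrative only).
vocab = {"the": 0, "cat": 1, "sat": 2, "down": 3}
d_model = 3

# Randomly initialised here; in a real model these weights are learned.
rng = random.Random(0)
embedding_matrix = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in vocab]

token_id = vocab["cat"]
embedding = project(one_hot(token_id, len(vocab)), embedding_matrix)

# Projecting a one-hot vector simply selects one row of the matrix.
assert embedding == embedding_matrix[token_id]
```

Because the projection only picks out a row, practical implementations usually skip the explicit one-hot vector and look the row up by token id.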

Positional encodings are added to the token embeddings because attention mechanisms do not inherently have a sense of order or position. They have the same dimension as the embeddings, so the two can be summed, and they work with sequences of variable length.
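As a rough sketch of how this can look, here is the sinusoidal scheme from the original Transformer paper. The summary above does not say which scheme the book uses, so treat the sine/cosine formula, the constant 10000 and the function names as assumptions on my part.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def add_positions(embeddings, pe):
    """Element-wise sum; works because both have dimension d_model."""
    return [[e + p for e, p in zip(emb_row, pe_row)]
            for emb_row, pe_row in zip(embeddings, pe)]

# Hypothetical embeddings for a 3-token sequence with d_model = 4.
embeddings = [[0.1, 0.2, 0.3, 0.4],
              [0.5, 0.6, 0.7, 0.8],
              [0.9, 1.0, 1.1, 1.2]]
pe = positional_encoding(seq_len=len(embeddings), d_model=4)
encoded = add_positions(embeddings, pe)
```

Since the encoding is computed from the position alone, the same function handles a sequence of any length.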

Within the encoder of the transformer architecture, each layer contains a multi-head attention (MHA) block and a feedforward neural network (FFNN). The MHA block consists of parallel attention heads, each working independently to capture relationships between tokens. The FFNN uses a nonlinear activation such as ReLU (rectified linear unit) or GELU (Gaussian Error Linear Unit) to process the output of the previous layer and map it to a more complex space.

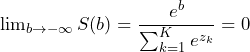
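To make the feedforward sublayer a little more concrete, here is a minimal sketch of the two activation functions and a two-layer FFNN applied to a single token vector. The weights, sizes and function names are made up for illustration, and the GELU shown is the exact form based on the normal CDF (some libraries use a tanh approximation instead).

```python
import math

def relu(x):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)

def gelu(x):
    """Exact GELU: x times the standard normal CDF evaluated at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def feed_forward(x, w1, b1, w2, b2, activation=relu):
    """Expand to a hidden layer, apply the activation, project back."""
    hidden = [activation(sum(xi * w for xi, w in zip(x, row)) + b)
              for row, b in zip(w1, b1)]
    return [sum(h * w for h, w in zip(hidden, row)) + b
            for row, b in zip(w2, b2)]

# Hypothetical toy weights: 2 inputs -> 3 hidden units -> 2 outputs.
x = [1.0, -2.0]
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 1.0, 1.0], [0.5, -0.5, 0.0]]
b2 = [0.0, 0.0]

out_relu = feed_forward(x, w1, b1, w2, b2, activation=relu)
out_gelu = feed_forward(x, w1, b1, w2, b2, activation=gelu)
```

ReLU zeroes out the two negative hidden units entirely, while GELU lets a small negative value through, which is the main practical difference between the two.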

The decoder similarly contains multiple decoder blocks, positional encodings and output embeddings. The output embedding is the bottom-most element of the decoder component, and its counterpart is the input embedding in the encoder component. Above this element is the positional encoding, and above that are one or more decoder blocks (typically 6 or 12). The decoder is a superset of the encoder because it contains the same two sublayers as the encoder plus a third sublayer called the masked multi-head attention (MMHA) layer. Both encoders and decoders have MHA blocks, but only the decoder has an MMHA block. MMHAs are backward-looking, meaning they cannot see the next token and must predict it (hence the name autoregressive). Technically, this is done by setting the upper triangle of the attention score matrix to negative infinity, which the softmax converts to 0. The softmax is defined as:

(1) $$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

for $i = 1, \dots, K$ elements (or classes) in the vector. The denominator can be thought of as a normalization constant that ensures the softmax produces a probability between 0 and 1. Plugging in $z_i = -\infty$, we obtain $\mathrm{softmax}(z_i) = 0$, since $e^{-\infty} = 0$ and the denominator must be nonzero.
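To see the masking trick in action, here is a small sketch that sets the upper triangle of a score matrix to negative infinity and applies the softmax row by row. The score values are arbitrary numbers I picked for illustration, and `causal_mask` is a hypothetical helper name.

```python
import math

def softmax(values):
    """Softmax over a list; subtracting the max keeps exp() stable."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def causal_mask(scores):
    """Replace every entry above the diagonal with negative infinity."""
    n = len(scores)
    return [[scores[i][j] if j <= i else float("-inf") for j in range(n)]
            for i in range(n)]

# Arbitrary attention scores for a 3-token sequence (illustrative only).
scores = [[0.5, 1.2, -0.3],
          [0.1, 0.4, 2.0],
          [1.0, 0.0, 0.7]]

weights = [softmax(row) for row in causal_mask(scores)]

# Row i only has nonzero weight on positions 0..i.
for i, row in enumerate(weights):
    assert all(w == 0.0 for w in row[i + 1:])
```

The first token can only attend to itself, so its row becomes all weight on position 0, while the last token spreads its weight over the whole sequence.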

There are three main implementations of the transformer architecture: encoder-only (for classification), decoder-only (for language modeling) and encoder-decoder (for machine translation). Encoder-only models (e.g. BERT) are good for understanding input, as in sentence classification and named entity recognition, but they are not good for generating new sequences of a different length. Decoder-only models are autoregressive models, such as GPT, that are suitable for text generation. Encoder-decoder models (known as sequence-to-sequence models) are good for translation, where the encoder encodes a sequence in one language and the decoder produces a sequence of a possibly different length in another language.

Causal self-attention means that tokens can only access past tokens that belong to the same sentence. Encoder-decoder architectures require causal self-attention in the decoder because each autoregressive decoding step must depend only on previous tokens. Decoder-only architectures also require causal self-attention.

A unidirectional embedding processes the text in only one direction (typically left to right) and is well suited for text generation (e.g. GPT). A bidirectional embedding takes into account the tokens that both precede and follow a given token. Bidirectional embeddings are suited for sentence-level tasks such as rewriting or summarization.

There are two main types of transformer-based models: autoregressive and autoencoding. Autoencoding models learn encodings of the input, whereas autoregressive models predict each token from the tokens that precede it. The most popular autoencoding model is BERT, which performs unsupervised learning (meaning no ‘correct’ labels are provided to train the model) via a) masked language modeling (MLM) and b) next sentence prediction (NSP). MLM replaces approximately 15% of input tokens with a [MASK] token and attempts to predict the masked tokens. The NSP task involves determining whether a pair of sentences appear consecutively, in that order, in the corpus.

Hugging Face provides AutoClasses that simplify retrieval of an appropriate LLM. The transformers package provides three main groups: AutoModel, AutoConfig, and AutoTokenizer. For example, AutoTokenizer returns an instance of the tokenizer class appropriate for a given model on the Hugging Face model hub. The goal is to abstract away the manual selection of parameters (e.g., the number of layers, attention heads, etc.) and tokenizers.

Even more abstract than these AutoClasses is pipeline, which automatically tokenizes the text (into subwords that are then mapped to integers), generates the vector embeddings from the tokens and feeds these embeddings into the (neural network or transformer) model. For example, you can perform sentiment analysis out of the box with just these simple lines of code:

```python
from transformers import pipeline

# Build a sentiment-analysis pipeline using the library's default model.
nlp = pipeline('sentiment-analysis')
```

This pipeline automatically selects a pre-trained model fine-tuned for sentiment analysis, processes the text into tokens/embeddings (pre-processing) and generates an output (post-processing). Other pipelines include text generation, question-answering, named entity recognition, summarization, translation and feature extraction.
